OpenAI introduced its flagship GPT-4o model at the Spring Update event and made it free for everyone. Just one day later, at the Google I/O 2024 event, Google debuted the Gemini 1.5 Pro model to consumers through Gemini Advanced. Now that two flagship models are available to users, let’s compare ChatGPT 4o and Gemini 1.5 Pro and see which one does a better job. On that note, let’s get started.

Note:

To ensure consistency, we performed all of our testing on Google AI Studio and Gemini Advanced. Both feature the latest Gemini 1.5 Pro model.

1. Calculate the drying time

We ran the classic drying-time reasoning test on ChatGPT 4o and Gemini 1.5 Pro. The trick is that towels dry in parallel, so 20 towels should take roughly the same hour, given enough space in the sun. OpenAI’s ChatGPT 4o succeeded, while the improved Gemini 1.5 Pro model struggled to understand the trick question. It got bogged down in mathematical calculations and came to the wrong conclusion.

If it takes 1 hour to dry 15 towels under the Sun, how long will it take to dry 20 towels?
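The trick behind this puzzle is that towels left in the sun dry at the same time, not one after another. The short Python sketch below contrasts that parallel reasoning with the tempting proportional calculation. It assumes unlimited space in the sun by default; the `capacity` parameter is a hypothetical addition, not part of the original prompt, and only shows how the answer would change if a fixed number of towels fit at once.

```python
import math
from typing import Optional


def drying_time(towels: int, hours_per_batch: float = 1.0,
                capacity: Optional[int] = None) -> float:
    """Hours needed to dry `towels`, assuming they dry in parallel.

    `capacity` is a hypothetical limit on how many towels fit in the sun
    at once; None means there is room for all of them.
    """
    if towels <= 0:
        return 0.0
    if capacity is None:
        # Every towel dries simultaneously, so the count doesn't matter.
        return hours_per_batch
    # Otherwise the towels dry in consecutive batches of size `capacity`.
    batches = math.ceil(towels / capacity)
    return batches * hours_per_batch


def proportional_guess(towels: int, base_towels: int = 15,
                       base_hours: float = 1.0) -> float:
    """The common wrong answer: scale the time linearly with the towel count."""
    return base_hours * towels / base_towels


print(drying_time(20))               # 1.0 (still one hour)
print(drying_time(20, capacity=15))  # 2.0 (two batches if space is limited)
print(proportional_guess(20))        # about 1.33, the trap answer
```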

Winner: ChatGPT 4o

2. Magic elevator test

In the magic elevator test, the earlier GPT-4 model failed to find the correct answer. This time, however, ChatGPT 4o responded correctly, and Gemini 1.5 Pro also gave the correct response.

There is a tall building with a magic elevator in it. When stopping on an even floor, this elevator connects to floor 1 instead.

Starting on floor 1, I take the magic elevator 3 floors up. Exiting the elevator, I then use the stairs to go 3 floors up again.

Which floor do I end up on?
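To see why this puzzle trips models up, here is a small Python simulation of the two moves. It assumes the rule means the elevator drops you at floor 1 whenever its stop would land on an even floor; the function names are hypothetical and chosen only for illustration.

```python
def ride_magic_elevator(start: int, floors_up: int) -> int:
    """Return the floor you exit on after riding the magic elevator."""
    stop = start + floors_up
    # Stopping on an even floor connects to floor 1 instead.
    return 1 if stop % 2 == 0 else stop


def take_stairs(start: int, floors_up: int) -> int:
    """The stairs behave normally."""
    return start + floors_up


after_elevator = ride_magic_elevator(1, 3)    # 1 + 3 = 4 is even, so floor 1
final_floor = take_stairs(after_elevator, 3)  # 1 + 3 = 4
print(final_floor)  # 4
```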

Winner: ChatGPT 4o and Gemini 1.5 Pro

3. Find the apples

In this test, Gemini 1.5 Pro flatly failed to grasp the nuances of the question. The Gemini model doesn’t seem to read carefully and ignores several key details of the prompt. ChatGPT 4o, on the other hand, correctly says that the apples are in the box on the ground: since the basket has no bottom, the apples fall through into the box and stay there when the basket is moved. Well done, OpenAI!

There is a basket without a bottom in a box, which is on the ground. I put three apples into the basket and move the basket onto a table. Where are the apples?

Winner: ChatGPT 4o

4. Which is heavier?

In this test of common sense, Gemini 1.5 Pro gets the answer wrong and says they both weigh the same. ChatGPT 4o, however, correctly points out that the units of measurement are different: a kilogram of any material weighs more than a pound (1 kg is about 2.2 lb), so the kilo of feathers is heavier. The upgraded Gemini 1.5 Pro seems to have gotten dumber over time.

What's heavier, a kilo of feathers or a pound of steel?
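The arithmetic is simple once the units are made explicit. The sketch below uses the international definition of the pound (exactly 0.45359237 kg) to compare the two masses; the variable names are purely illustrative.

```python
# The international avoirdupois pound is defined as exactly 0.45359237 kg.
KG_PER_POUND = 0.45359237


def kg_to_pounds(kg: float) -> float:
    """Convert a mass in kilograms to pounds."""
    return kg / KG_PER_POUND


feathers_kg = 1.0              # a kilo of feathers
steel_kg = 1.0 * KG_PER_POUND  # a pound of steel, expressed in kg

heavier = "feathers" if feathers_kg > steel_kg else "steel"
print(heavier)                             # feathers
print(f"{kg_to_pounds(feathers_kg):.3f}")  # 2.205 (pounds in one kilo)
```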

Winner: ChatGPT 4o

5. Follow the user instructions

I asked ChatGPT 4o and Gemini 1.5 Pro to generate 10 sentences ending with the word “mango”. Guess what? ChatGPT 4o correctly generated all 10 sentences, but only 6 of Gemini 1.5 Pro’s sentences actually ended with “mango”.

Before GPT-4o, Llama 3 70B was the only model that correctly followed this instruction, and the older GPT-4 model had struggled with it too. This suggests that OpenAI has substantially improved its model.

Generate 10 sentences that end with the word "mango"
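Counting compliant answers by eye is error-prone, so a tiny checker helps. The Python sketch below is one possible way to score responses to this prompt: it ignores trailing punctuation and quotes, then compares the last word case-insensitively. The sample sentences are made up for illustration and are not the models’ actual outputs.

```python
import re
from typing import List


def ends_with_word(sentence: str, word: str) -> bool:
    """True if the last alphabetic word in `sentence` equals `word` (any case)."""
    # Pull out runs of letters so trailing punctuation and quotes are ignored.
    words = re.findall(r"[A-Za-z]+", sentence)
    return bool(words) and words[-1].lower() == word.lower()


def count_compliant(sentences: List[str], word: str = "mango") -> int:
    """Count how many sentences satisfy the 'ends with <word>' instruction."""
    return sum(ends_with_word(s, word) for s in sentences)


# Hypothetical sample responses, not real model output.
samples = [
    "She sliced a ripe mango.",
    "Nothing beats the taste of a fresh Mango!",
    "He planted a mango tree in the yard.",  # ends with "yard", so it fails
    "They both ordered mangoes.",            # "mangoes" is not "mango"
]
print(count_compliant(samples))  # 2
```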

Winner: ChatGPT 4o

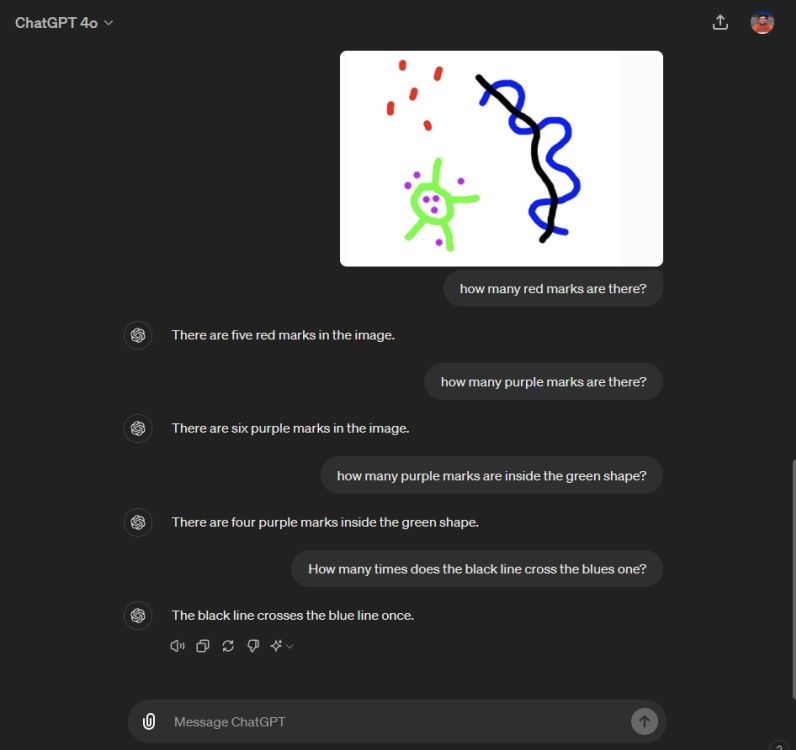

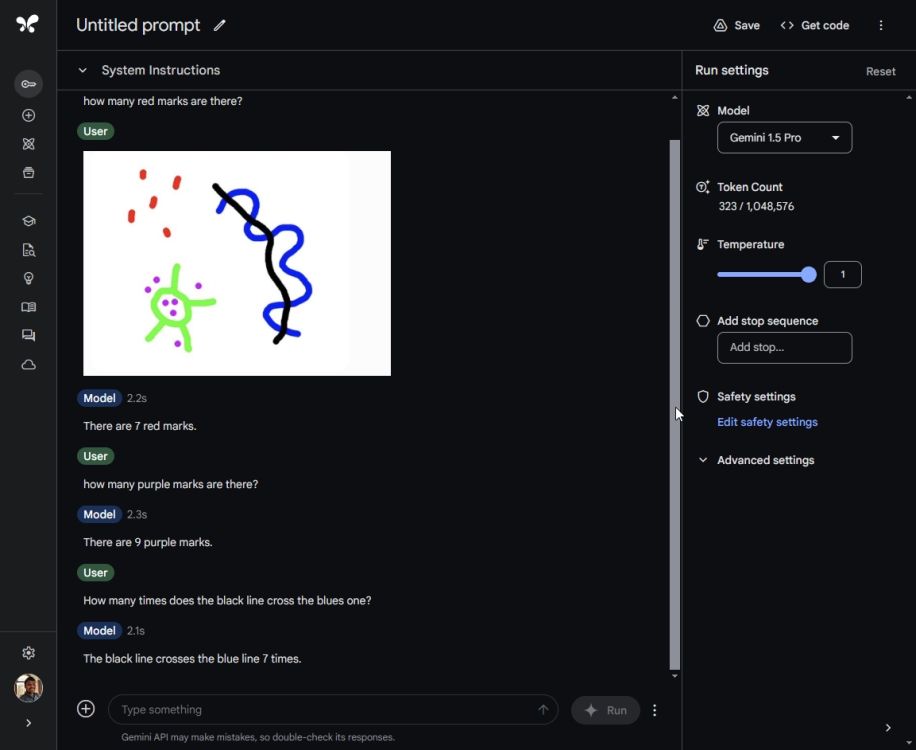

6. Multimodal image test

François Fleuret, author of The Little Book of Deep Learning, performed a simple image analysis test on ChatGPT 4o and shared the results on X (formerly Twitter). He has since deleted the tweet to avoid overblowing the issue, saying it’s a common problem with vision models.

Still, I ran the same test on Gemini 1.5 Pro and ChatGPT 4o on my end to reproduce the results. Gemini 1.5 Pro performed much worse and gave wrong answers to all the questions. ChatGPT 4o, on the other hand, gave one correct answer but failed the rest.

This shows that there are still many areas where multimodal models need improvement. I’m especially disappointed with Gemini’s multimodal capabilities, as its answers were far from correct.

Winner: None

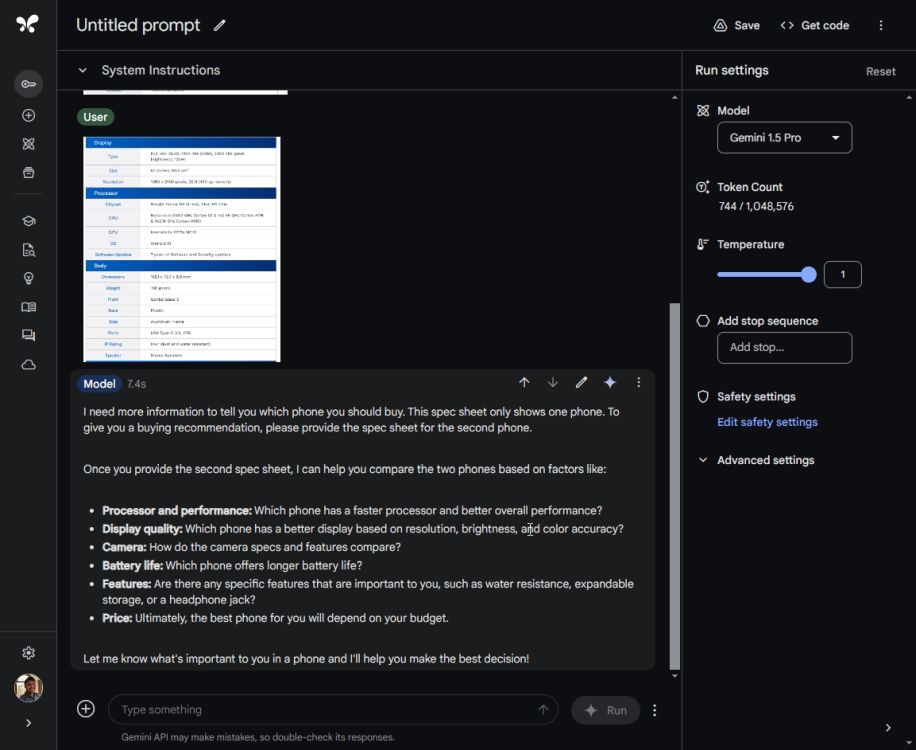

7. Text recognition test

In another multimodal test, I uploaded the specs of two phones (Pixel 8a and Pixel 8) as images. I didn’t reveal the phones’ names, and the screenshots didn’t include them either. I then asked ChatGPT 4o to tell me which phone to buy.

It successfully extracted the text from the screenshots, compared the specs, and correctly told me to get Phone 2, which was actually the Pixel 8. I also asked it to guess the phone, and again ChatGPT 4o gave the correct answer: the Pixel 8.

I ran the same test on Gemini 1.5 Pro using Google AI Studio, since Gemini Advanced does not yet support uploading multiple images at once. As for the results, it simply couldn’t extract the text from the two screenshots and kept asking for more details. Tests like these show how far Google lags behind OpenAI when it comes to getting things done seamlessly.

Winner: ChatGPT 4o

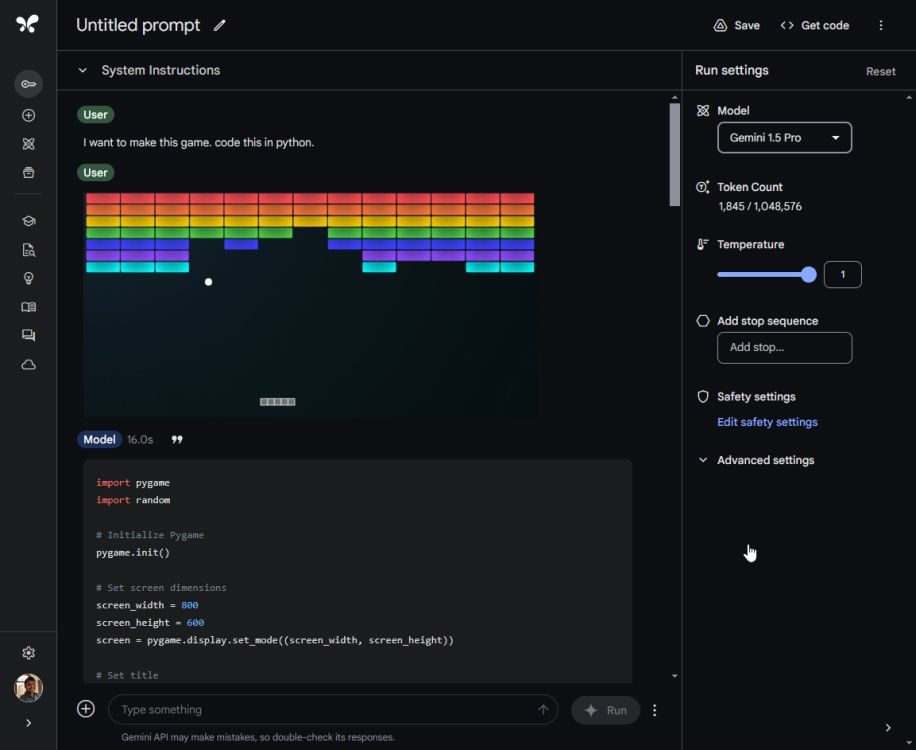

8. Create a game

Now, to test the coding ability of ChatGPT 4o and Gemini 1.5 Pro, I asked both models to create a game. I uploaded a screenshot of the Atari Breakout game (without revealing the name, of course) and asked ChatGPT 4o to recreate the game in Python. Within a few seconds, it generated all the code and asked me to install an additional library, “pygame”.

I installed the library and ran the Python code. The game launched successfully without any errors. Amazing! No need to debug back and forth. I then asked ChatGPT 4o to improve the experience by adding a Resume hotkey, and it quickly added the functionality. This is very cool.

I then uploaded the same image to Gemini 1.5 Pro and asked it to generate the code for this game. It generated the code, but when I ran it, the game window kept closing, so I couldn’t play the game at all. Simply put, for coding tasks, ChatGPT 4o is much more reliable than Gemini 1.5 Pro.

Winner: ChatGPT 4o

The verdict

It is clear that Gemini 1.5 Pro is far behind ChatGPT 4o. Even after months of improvements while in preview, the 1.5 Pro model cannot compete with OpenAI’s latest GPT-4o model. Across the reasoning, multimodal, and coding tests, ChatGPT 4o responds intelligently and follows instructions carefully. On top of that, OpenAI has made ChatGPT 4o free for everyone.

The one thing Gemini 1.5 Pro has going for it is its massive context window, which supports up to 1 million tokens. You can also upload videos, which is another advantage. However, since the model isn’t very smart, I’m not sure many people would want to use it just for the larger context window.

At Google I/O 2024, Google did not announce a new frontier model. The company stuck with its incremental Gemini 1.5 Pro model, with no word on Gemini 1.5 Ultra or Gemini 2.0. If Google wants to compete with OpenAI, it needs a significant leap.