If you tuned in to Google I/O, OpenAI's Spring Update, or Microsoft Build this month, you've probably heard the term AI agents come up quite a bit. They are quickly becoming the next big thing in technology, but what exactly are they? And why is everyone talking about them all of a sudden?

On stage at Google I/O, Google CEO Sundar Pichai described an AI system that can return a pair of shoes on your behalf. Microsoft announced Copilot AI systems that can act independently as virtual employees. Meanwhile, OpenAI unveiled an AI system, GPT-4 Omni, that can see, hear, and speak. Previously, OpenAI CEO Sam Altman told MIT Technology Review that helpful agents hold the technology's best potential. These types of systems are the new benchmark that every AI company is trying to reach, but that's easier said than done.

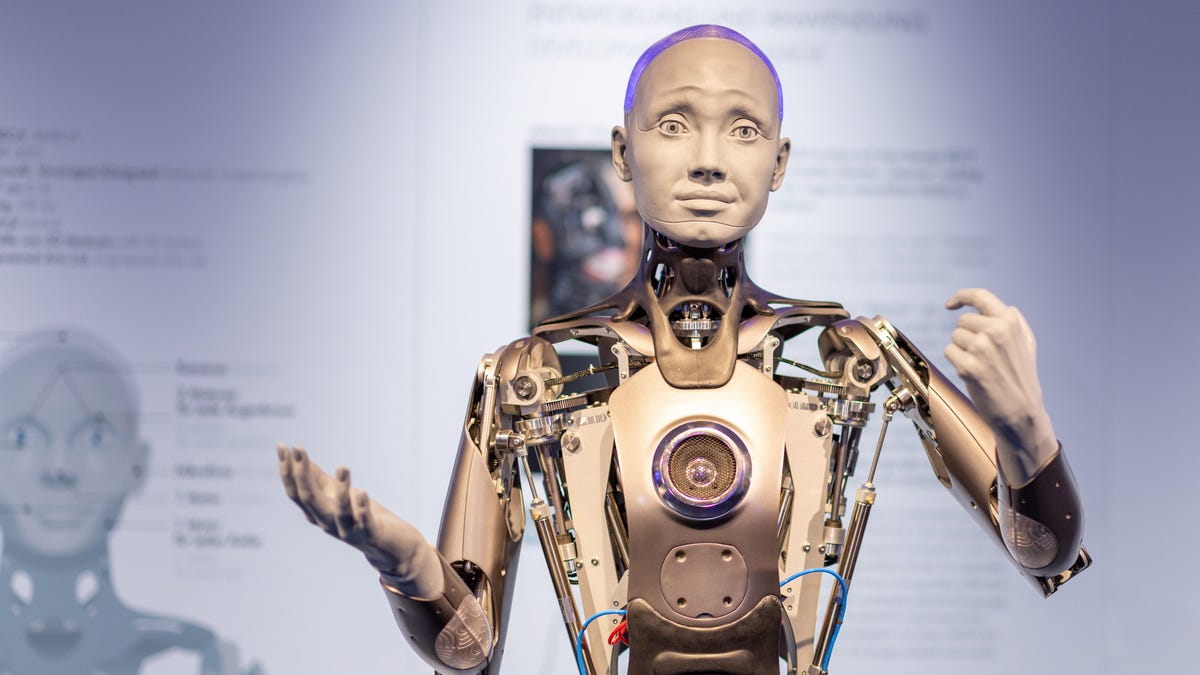

Simply put, AI agents are AI models that do something independently. Think of Jarvis from Iron Man, TARS from Interstellar, or HAL 9000 from 2001: A Space Odyssey. They go a step further than just generating a response like the chatbots we've come to know: they take action. To start, Google, Microsoft, and OpenAI are trying to develop agents that can handle digital actions. This means they are teaching AI agents to work with different APIs on your computer. Ideally, the agents can push buttons, make decisions, monitor channels autonomously, and send requests.

“I agree that the future is in agents,” said Echo AI founder and CEO Alexander Kvamme. His company builds AI agents that analyze businesses' conversations with customers and provide insights on how to improve that experience. “The industry has been talking about this for years and it still hasn't materialized. It's just such a difficult problem.”

Kvamme says a truly agentic system would need to make dozens or hundreds of decisions independently, which is a difficult thing to automate. To return a pair of shoes, as Google's Pichai explained, an AI agent might need to scan your email for a receipt, retrieve your order number and address, fill out a return form, and perform various actions on your behalf. That process involves many decisions you make subconsciously without ever thinking about them.
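To make that chain of small decisions concrete, here is a minimal Python sketch of the shoe-return steps described above. It is only an illustration under stated assumptions: the `Email` and `ReturnForm` types, the function names, and the `Order:` and `Ship to:` labels in the receipt are all hypothetical, and a real agent would have to cope with far messier inboxes than this toy one.

```python
import re
from dataclasses import dataclass


@dataclass
class Email:  # hypothetical stand-in for a message in your inbox
    subject: str
    body: str


@dataclass
class ReturnForm:  # hypothetical stand-in for a retailer's return form
    order_number: str
    address: str
    item: str


def find_receipt(inbox, item):
    """Decision 1: which email, if any, is the receipt for this item?"""
    for email in inbox:
        if "receipt" in email.subject.lower() and item.lower() in email.body.lower():
            return email
    return None


def extract_field(label, text):
    """Decision 2: pull a labeled value such as 'Order: A123' out of free text."""
    match = re.search(rf"{re.escape(label)}:\s*(.+)", text)
    return match.group(1).strip() if match else None


def plan_return(inbox, item):
    """Chain the steps; give up (return None) if any step cannot be completed."""
    receipt = find_receipt(inbox, item)
    if receipt is None:
        return None
    order_number = extract_field("Order", receipt.body)
    address = extract_field("Ship to", receipt.body)
    if order_number is None or address is None:
        return None
    return ReturnForm(order_number, address, item)


# Toy inbox: one distractor email and one receipt (assumed format).
inbox = [
    Email("Weekly newsletter", "New running shoes are in stock."),
    Email("Your receipt", "Item: running shoes\nOrder: A123\nShip to: 1 Main St"),
]
form = plan_return(inbox, "running shoes")
print(form)
```

Even in this toy version, every step can fail on its own, which is why the sketch stops as soon as one decision cannot be made rather than guessing.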

As we have seen, large language models (LLMs) are not perfect even in controlled environments. Altman's new favorite thing is to call ChatGPT “incredibly dumb,” and he's not entirely wrong. When you ask LLMs to work independently on the open internet, they are error-prone. Yet that is exactly what countless startups are working on, including Echo AI, as well as larger companies like Google, OpenAI, and Microsoft.

If you can create agents that work digitally, there isn't much of a barrier to creating agents that work with the physical world as well. You just need to program that task into a robot. Then you really get into the sci-fi stuff, as AI agents open up the possibility of giving robots a job like “take the order to this table” or “install all the shingles on this roof.” We're a long way from there, but the first step is to teach AI agents to perform simple digital tasks.

There's an oft-discussed problem in the world of AI agents: making sure you're not designing an agent that performs its task too well. If you've set up a shoe return agent, you'll need to make sure it doesn't return all your shoes, or perhaps everything you have a receipt for in your Gmail inbox. As silly as it sounds, there is a small but vocal cohort of AI researchers who worry that overly determined AI agents could spell doom for human civilization. I suppose when you're creating things straight out of science fiction, that's a valid concern.
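One simple way to picture a safeguard against an overeager agent is an explicit allowlist plus a hard cap on how many actions it may take. The Python sketch below is an assumption made for illustration, not something any of the companies mentioned here has described; the `approve_actions` function and the order numbers are hypothetical.

```python
def approve_actions(proposed_returns, approved_orders, max_returns=1):
    """Keep only returns the user explicitly approved, up to a hard cap."""
    allowed = [order for order in proposed_returns if order in approved_orders]
    return allowed[:max_returns]


# An overeager agent proposes returning every order it found a receipt for.
proposed = ["A123", "B456", "C789"]
# The user only ever asked for one pair of shoes to go back (hypothetical order).
approved = {"A123"}

to_execute = approve_actions(proposed, approved)
print(to_execute)
```

The filter runs outside the agent itself, so even a model that misreads the request can only act on orders the user signed off on.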

On the other side of the spectrum are the optimists, like Echo AI, who believe this technology will create more opportunities. The divide in the AI community is quite stark, but the optimists see AI agents having a liberating effect comparable to that of the personal computer.

“I strongly believe that much of the work that [agents] will take over is work that people would rather not do,” Kvamme said. “And there's a higher-value use of their time and their life. But again, they have to adapt.”

Another use case for AI agents is self-driving cars. Tesla and Waymo are currently leading the way in this technology, in which cars use AI to navigate city streets and highways. Although niche, self-driving is a fairly developed area of AI agents where we are already seeing AI at work in the real world.

So what will get us to this future where AI can return your shoes? First, the underlying AI models probably need to get better and more accurate. This means that updates to ChatGPT, Gemini, and Copilot will likely precede fully functional agent systems. AI chatbots still have to overcome their massive problem with hallucinations, which many researchers see no clear way to solve. But the agent systems themselves also need to improve. OpenAI's GPT store is currently the most successful effort to develop an agent network, but even that is still not very advanced.

While advanced AI agents are definitely not here yet, they are the goal of many AI companies, large and small, these days. Agents could be what makes AI significantly more useful in our daily lives. Although it sounds like science fiction, billions of dollars are being spent to make agents a reality in our lifetime. However, that is a lot to promise from AI companies that still struggle to get chatbots to answer basic questions reliably.