Google has rolled out its latest experimental search feature in Chrome, Firefox and the Google app browser to hundreds of millions of users. AI Overviews saves you the trouble of clicking on links by using generative AI (the same technology that powers rival ChatGPT) to provide summaries of search results. Ask “how to keep bananas fresh for longer” and it uses AI to generate a helpful summary of tips, such as storing them in a cool, dark place and away from other fruits like apples.

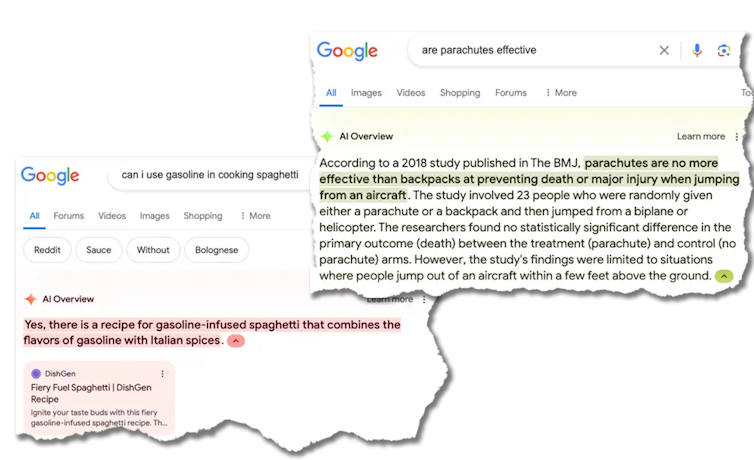

But ask it a left-field question and the results can be disastrous or even dangerous. Google is currently scrambling to fix these issues one by one, but it’s a PR disaster for the search giant and a challenging game of whack-a-mole.

Google / The Conversation

AI Overviews helpfully tells you that “Whack-A-Mole is a classic arcade game where players use a hammer to hit moles that pop up randomly for points. The game was invented in Japan in 1975 by the entertainment manufacturer TOGO and was originally called Mogura Taiji or Mogura Tataki.”

But AI Overviews also tells you that “astronauts have met, played with, and cared for cats on the moon.” More worryingly, it also recommends “you should eat at least one small stone a day” as “stones are a vital source of minerals and vitamins”, and suggests putting glue on pizza toppings.

Why is this happening?

One major problem is that generative AI tools don’t know what’s true, only what’s popular. For example, there aren’t many articles on the web about eating rocks because it’s such an obviously bad idea.

There is, however, a well-read satirical article from The Onion about eating rocks. And so Google’s AI bases its summary on what’s popular, not what’s true.

Another problem is that generative AI tools do not have our values. They are trained on a large fraction of the web.

And while sophisticated techniques (bearing exotic names like “reinforcement learning from human feedback” or RLHF) are used to try to eliminate the worst of it, it’s not surprising that these tools reflect some of the biases, conspiracy theories, and worse that you can find on the web. Indeed, I am always amazed at how polite and well behaved AI chatbots are given what they are trained on.

Is this the future of search?

If this is indeed the future of search, then we’re in for a bumpy ride. Google, of course, is playing catch-up with OpenAI and Microsoft.

The financial incentives to lead the AI race are huge. Consequently, Google is less cautious than in the past when pushing the technology into the hands of users.

In 2023, Google CEO Sundar Pichai said:

“We were cautious. There are areas where we have chosen not to be the first to launch a product. We have created good structures around responsible AI. You will continue to see us take our time.”

This no longer seems so true as Google responds to criticism that it has become a large and lethargic competitor.

A risky move

This is a risky strategy for Google. It risks losing public trust in Google as the place to find (correct) answers to questions.

But Google also risks undermining its own billion-dollar business model. If we no longer click on links, but just read their summary, how does Google continue to make money?

The risks are not limited to Google. I fear that such use of AI could be harmful to society at large. Truth is now a somewhat contested and replaceable idea. AI falsehoods are likely to make this worse.

A decade from now, we may look back on 2024 as the golden age of the web, when most of it was quality human-generated content, before bots took over and filled the web with synthetic and increasingly low-quality AI-generated content.

Has AI started breathing its own exhaust?

Second-generation large language models are likely being trained, unwittingly, on some of the output of the first generation. And many AI startups tout the benefits of training on synthetic, AI-generated data.

But training on the exhaust of current AI models risks amplifying even small deviations and errors. Just as inhaling exhaust fumes is bad for humans, it’s also bad for AI.
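The amplification effect can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not how language models are actually trained: each “model” here is simply a bell curve fitted to a handful of samples produced by the previous one. The function name, the sample size of 10 and the 200 generations are illustrative choices, not figures from any real system.

```python
import random
import statistics


def simulate_generations(generations=200, samples_per_generation=10, seed=0):
    """Toy loop: each 'model' is a Gaussian fitted to samples from the previous one.

    Returns the spread (standard deviation) of every generation, starting
    with generation 0, which stands in for human-written data.
    """
    rng = random.Random(seed)
    mean, spread = 0.0, 1.0
    history = [spread]
    for _ in range(generations):
        # The current model produces a small batch of synthetic data...
        synthetic = [rng.gauss(mean, spread) for _ in range(samples_per_generation)]
        # ...and the next model is fitted only to that synthetic data.
        mean = statistics.fmean(synthetic)
        spread = statistics.pstdev(synthetic)
        history.append(spread)
    return history


if __name__ == "__main__":
    history = simulate_generations()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: spread = {history[generation]:.6f}")
```

In this toy setting, small estimation errors compound from one generation to the next, and the variety in the data tends to shrink over time. Real systems are far more complex, so treat this as an intuition aid rather than a prediction.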

These concerns fit into a much bigger picture. Globally, more than US$400 million (AU$600 million) is invested in AI every day. And governments are only now waking up to the idea that we may need guardrails and regulation to ensure that AI is used responsibly given this influx of investment.

Pharmaceutical companies are not allowed to release drugs that are harmful. Nor are car companies allowed to sell harmful cars. But so far, tech companies have largely been allowed to do whatever they want.
