
Members of the Legendary Computer Bugs Tribunal, honored guests, may I have your attention? I humbly submit a new contender for your esteemed judgment. You may or may not find it novel, and you may even dispute whether it is really a “bug,” but I assure you that you will find it entertaining.

Consider NetHack. It is one of the all-time great roguelike games, and I mean that in the strictest sense of the term. Content is procedurally generated, deaths are constant, and the only things you carry from game to game are your own skill and knowledge. I understand that the only thing two roguelike fans can agree on is how wrong a third roguelike fan is about the definition of roguelike, but please, let’s move on.

NetHack is great for machine learning…

As a challenging game full of sequential choices and random challenges, and as a “single-agent” game that can be generated and played at lightning speed on modern computers, NetHack is great for those working in machine learning, or more specifically imitation learning, as detailed in Jens Tuyls’ paper on how compute scaling affects imitation learning in single-agent games. Using Tuyls’ model of expert NetHack behavior, Bartłomiej Cupiał and Maciej Wołczyk trained a neural network to play and improve itself using reinforcement learning.

By mid-May of this year, the pair’s model was consistently scoring 5,000 points by their own metrics. Then, on one run, the model’s performance suddenly dropped by about 40 percent: it scored 3,000 points. Machine learning on problems like this generally moves gradually in one direction. This didn’t make sense.

Cupiał and Wołczyk tried quite a few things: reverting their code, restoring their entire software stack from a Singularity backup, and rolling back their CUDA libraries. The result? 3,000 points. They rebuilt everything from scratch and still got 3,000 points.

NetHack, played by an ordinary human.

… except on certain nights

As detailed in Cupiał’s X (formerly Twitter) thread, this meant several hours of confused trial and error for him and Wołczyk. “I’m starting to feel crazy. I can’t even watch a TV show, constantly thinking about the bug,” Cupiał writes. In desperation, he asks Tuyls, the model’s author, whether he knows what could be wrong. He wakes up in Krakow to an answer:

“Oh, yes, it must be a full moon today.”

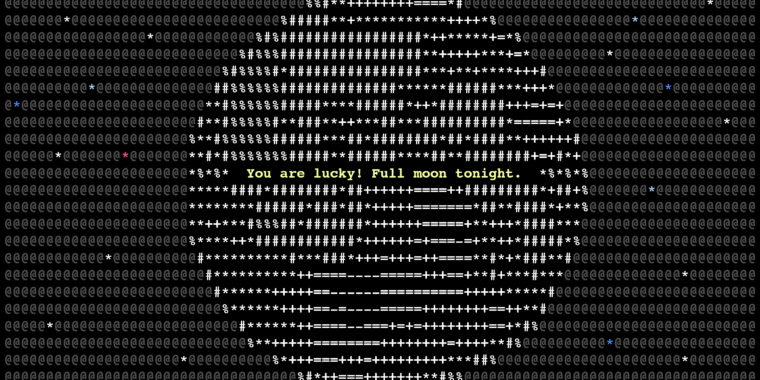

In NetHack, the game in which the DevTeam has thought of everything, if the game detects from your system clock that it should be a full moon, it generates a message: “You are lucky! Full moon tonight.” A full moon grants the player a couple of benefits: one point added to Luck, and werecreatures mostly staying in their animal forms.
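For the curious, here is a minimal Python sketch of how a clock reading can become a moon phase in NetHack. It mirrors, as best we recall, the integer arithmetic of the C function `phase_of_the_moon()` in NetHack’s `hacklib.c`, which returns 0 for a new moon and 4 for a full moon. The Python names, the `full_moon_days` helper, and the choice to pass in a `date` instead of reading the system clock are our own assumptions, so treat this as an illustration rather than the DevTeam’s code.

```python
from datetime import date, timedelta

# NetHack numbers moon phases 0-7: 0 is a new moon, 4 is a full moon.
NEW_MOON = 0
FULL_MOON = 4


def phase_of_the_moon(day: date) -> int:
    """Return a phase 0-7 for `day`, mirroring (as recalled) NetHack's integer arithmetic.

    The real game reads the local system clock; taking a date as a parameter
    is an assumption of this sketch so results are reproducible and testable.
    """
    diy = day.toordinal() - date(day.year, 1, 1).toordinal()  # 0-based day of year, like C's tm_yday
    tm_year = day.year - 1900                                   # years since 1900, like C's tm_year
    goldn = (tm_year % 19) + 1       # "golden number": position in the 19-year Metonic cycle
    epact = (11 * goldn + 18) % 30   # approximate age of the moon at the start of the year
    if (epact == 25 and goldn > 11) or epact == 24:
        epact += 1
    # 177 / 6 = 29.5 days per lunar cycle, split into bins of 22 units;
    # the final "& 7" folds the lone overflow value (176 // 22 == 8) back to 0.
    return ((((diy + epact) * 6) + 11) % 177) // 22 & 7


def full_moon_days(year: int) -> list[date]:
    """Hypothetical helper: list every date in `year` this sketch reports as a full moon."""
    day, days = date(year, 1, 1), []
    while day.year == year:
        if phase_of_the_moon(day) == FULL_MOON:
            # In the game itself (as we recall), starting on such a day prints
            # "You are lucky!  Full moon tonight." and adds 1 to Luck.
            days.append(day)
        day += timedelta(days=1)
    return days


if __name__ == "__main__":
    print([d for d in full_moon_days(2023) if d.month == 5])
```

Run for 2023, the sketch flags May 4 through 7 as full-moon days. Because the day counter advances 6 units per day through a 22-unit bin, each lunar cycle yields a window of only three or four consecutive “full moon” days.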

All things considered, it’s an easier game, so why would the learning agent score lower? The agent simply has no full-moon variables in its training data, so its branching series of decisions likely leads to worse results, or just plain confusion. It was indeed a full moon in Krakow when the 3,000-point results started showing up. What a terrible night to have a learning model.

Of course, “score” is not a true measure of success in NetHack, as Cupiał himself notes. Ask a model to get the best score, and it will farm the heck out of early-stage monsters because it never gets bored. “Finding items needed for [ascension] or even [just] doing a quest is too much for a pure RL agent,” Cupiał writes. Another agent, AutoAscend, does a better job of progressing through the game, but “even it can only solve Sokoban and reach Mines’ End,” Cupiał notes.

Is this a bug?

I submit that, although NetHack responded to the full moon exactly as intended, this strange, very hard-to-diagnose stop on a machine learning journey was indeed a bug, and one worthy of the pantheon. It’s not a Harvard moth, nor a 500-mile email, but what is?

Because the team used Singularity to back up and restore their stack, they inadvertently carried the machine’s time, and with it the bug, forward every time they tried to fix it: restoring software does not roll back the system clock. The machine’s resulting behavior was so strange, and so seemingly driven by unseen forces, that it drove a coder to distraction. And the story has a beginning, a climactic middle, and a denouement that teaches us something, however obscure.

In my opinion, the NetHack Lunar Learning Bug deserves a monument. Thank you for your time.