My task for our AI overlords was simple: help me crack The New York Times Spelling Bee.

I had spent a good part of a Saturday night trying to fit the letters G, Y, A, L, P, O and N into as many words as possible. But three hours, 141 points, and 37 words (including “nonapology,” “lagoon,” and “analogy”) later, I had hit a wall. A few more words were all I needed to advance to the “genius” echelon of the Spelling Bee, the title reserved for those who unscramble 70 percent of all possible words using the given letters, and the point at which the puzzle is considered effectively solved. (100 percent solvers get the queen bee rank, but even I have limitations.)

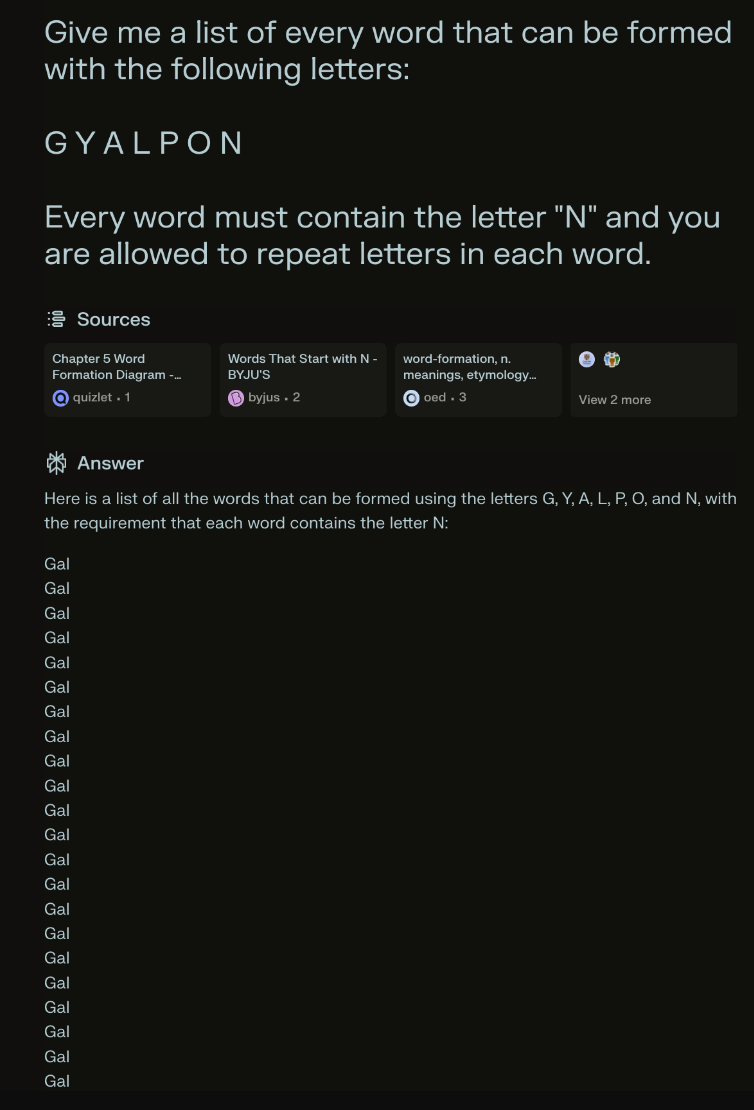

My human mind was obviously struggling, but this task seemed like child’s play for AI, so I fired up ChatGPT, told it I was trying to win the Spelling Bee, gave it my letters, and laid out the rules. “Give me a list of every word that can be formed with them,” I wrote. “You are allowed to repeat letters, but every word must contain an ‘N’.”

When I hit enter, this is what ChatGPT spit out in response:

Here is a list of words formed with the letters “GYALPON” that must contain the letter “N”:

GLNPAYO

YPNL

PGNOIL

ONAPILG

LGNAPYO

ANLEY

THE MOST

HIPPO

AOGPLNY

PNAOGLI

This is only a sampling of the list due to the large number of possible permutations.

Not a great start.

“The words should be in the dictionary,” I wrote back. “Sure,” came the reply. “I will provide a list of valid English words based on the given letters.” ANGLE, GALLON, TONGUE.

Obviously that didn’t work, so I turned to Microsoft’s Copilot (YAN, GAME, PLANNING, BORING), Google’s Gemini (GAPON, GON, JIAN), and Claude from Anthropic (MANGO, CONTINUED, GRASS 17. LEJ). Meta AI helpfully told me that it had taken care to include only words recognized by dictionaries, in a list that contained NALYP and NAGI, while Perplexity, a chatbot with ambitions to kill Google Search, simply wrote GAL hundreds of times before freezing abruptly.

AI can now create images, video and audio as fast as you can type in descriptions of what you want. It can write poetry, essays and term papers. It can also be a pale imitation of your girlfriend, your therapist, and your personal assistant. And many people think it’s poised to automate people out of work and transform the world in ways we can hardly imagine. So why is it so bad at solving a simple word puzzle?

The answer lies in how large language models, the underlying technology that powers our modern AI obsession, work. Computer programming is traditionally logical and rule-based; you enter commands that the computer follows according to a set of instructions, and it provides valid output. But machine learning, of which generative AI is a subset, is different.

“It’s purely statistical,” Noah Giansiracusa, a professor of mathematics and data science at Bentley University, told me. “It’s really about extracting patterns from data and then pushing out new data that closely matches those patterns.”

OpenAI hasn’t officially responded, but a company spokesperson told me that this type of “feedback” has helped OpenAI improve its understanding of the model and its responses to problems. Microsoft and Meta declined to comment. Google, Anthropic and Perplexity did not respond by the time of publication.

At the heart of large language models are “transformers,” a technical breakthrough made by researchers at Google in 2017. After you enter a prompt, a large language model breaks words, or parts of those words, into mathematical units called “tokens.” Transformers are able to analyze each token in the context of the larger data set on which a model is trained to see how they relate to each other. Once the transformer understands these connections, it is able to respond to your prompt by guessing the next likely token in a sequence. The Financial Times has a great animated explainer that breaks it all down if you’re interested.
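To make the idea of tokens concrete, here is a minimal Python sketch of how a word can be cut into sub-word pieces before a model ever sees it. Everything in it is an assumption made for illustration: the `TOY_VOCAB` vocabulary is invented, and the greedy longest-match split is a simplified stand-in for the learned tokenizers that real chatbots use.

```python
# Toy illustration only. Real tokenizers learn their vocabularies from data;
# this vocabulary and its ID numbers are invented for the example.
TOY_VOCAB = {
    "lag": 0, "oon": 1, "ana": 2, "logy": 3, "non": 4, "apo": 5,
    "l": 6, "a": 7, "g": 8, "o": 9, "n": 10, "y": 11, "p": 12,
}


def tokenize(word, vocab=TOY_VOCAB):
    """Split `word` into vocabulary pieces using greedy longest match."""
    word = word.lower()
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest remaining chunk first, shrinking until one matches.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"cannot tokenize {word[i:]!r}")
    return pieces


def token_ids(word, vocab=TOY_VOCAB):
    """Return the numeric IDs a model would receive instead of letters."""
    return [vocab[piece] for piece in tokenize(word, vocab)]


print(tokenize("lagoon"))       # ['lag', 'oon']
print(token_ids("lagoon"))      # [0, 1]
print(tokenize("nonapology"))   # ['non', 'apo', 'logy']
```

In this toy setup, “lagoon” reaches the model as the two numbers 0 and 1 rather than as six letters, which hints at why a rule about individual letters is awkward for a system that works on chunks.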

Although I thought I was giving the chatbots precise instructions to generate my Spelling Bee words, all they did was convert my words into tokens and use transformers to spit out plausible answers. “It’s not the same as computer programming or typing a command at the DOS prompt,” Giansiracusa said. “Your words were translated into numbers and then processed statistically.” It seems that a purely logic-based query is exactly the worst application for AI’s skills, much like trying to turn a screw with a resource-intensive hammer.
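For contrast, the rule-based approach fits in a few lines of ordinary code. The Python sketch below is only an illustration under stated assumptions: `SAMPLE_CANDIDATES` is a hypothetical stand-in for a real dictionary file, and the four-letter minimum comes from the game’s published rules rather than from my prompt. Because the Times uses its own curated word list, a filter like this would not reproduce the official answers exactly.

```python
# Hypothetical stand-in for a real dictionary word list.
SAMPLE_CANDIDATES = [
    "lagoon", "analogy", "nonapology", "pagan",
    "tongue", "hippo", "gal", "yoga",
]


def spelling_bee_words(candidates, letters="gyalpon", required="n", min_len=4):
    """Keep candidates that use only `letters` and include `required`.

    Letters may repeat. `min_len=4` is an assumption taken from the
    published game rules, not from the prompt quoted in the article.
    """
    allowed = set(letters)
    found = []
    for word in candidates:
        w = word.strip().lower()
        # Three checks: long enough, has the required letter, no stray letters.
        if len(w) >= min_len and required in w and set(w) <= allowed:
            found.append(w)
    return sorted(found)


print(spelling_bee_words(SAMPLE_CANDIDATES))
# ['analogy', 'lagoon', 'nonapology', 'pagan']
```

The same input always produces the same output, and a word such as “tongue” or “hippo” can never slip through, because every candidate is checked letter by letter against the rules.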

The success of an AI model also depends on the data it is trained on. That’s why AI companies are frantically making deals with news publishers right now: the fresher the training data, the better the answers. Generative AI, for example, is lousy at suggesting chess moves, but is at least slightly better at that task than at solving word puzzles. Giansiracusa points out that the abundance of chess games available on the Internet almost certainly fed into the training data for existing AI models. “I suspect that there simply aren’t enough annotated Spelling Bee games online for the AI to train on as there are chess games,” he said.

“If your chatbot seems more confused by a word game than a cat with a Rubik’s cube, it’s because it hasn’t been specifically trained to play complex word games,” said Sandy Bessen, an artificial intelligence researcher at Neudesic, an artificial intelligence company owned by IBM. “Word games have specific rules and restrictions that the model would struggle to follow unless specifically instructed during training, fine-tuning, or prompting.”

None of this has stopped the world’s leading AI companies from touting the technology as a panacea, often greatly exaggerating its capabilities. In April, both OpenAI and Meta boasted that their new AI models would be able to “reason” and “plan.” In an interview, OpenAI COO Brad Lightcap told the Financial Times that the next generation of GPT, the AI model that powers ChatGPT, will show progress in solving “hard problems” like reasoning. Joelle Pineau, Meta’s vice president of artificial intelligence research, told the publication that the company is “hard at work figuring out how to make these models not just talk, but actually think, plan…have memory.”

My repeated attempts to get GPT-4o and Llama 3 to crack the Spelling Bee failed spectacularly. When I told ChatGPT that GALLON, LONG and ANGLE were not in the dictionary, the chatbot said it agreed with me and suggested GALVANOPY instead. When I mistyped the word “sure” as “sur” in my response to Meta AI’s offer to come up with more words, the chatbot told me that “sur” was indeed another word that could be formed with the letters G, Y, A, L, P, O and N.

Clearly, we are still a long way from artificial general intelligence, the nebulous concept describing the moment when machines are capable of performing most tasks as well as or better than human beings. Some experts, such as Yann LeCun, Meta’s chief artificial intelligence scientist, have been outspoken about the limitations of large language models, arguing that they will never reach human-level intelligence because they don’t actually use logic. At an event in London last year, LeCun said the current generation of AI models “just don’t understand how the world works. They are incapable of planning. They’re not capable of real reasoning.” He added: “We don’t have fully autonomous, self-driving cars that can be taught to drive in about 20 hours of practice, something a 17-year-old can do.”

Giansiracusa, however, takes a more cautious tone. “We don’t really know how people think, do we? We don’t know what intelligence really is. I don’t know if my brain is just a big statistical calculator, sort of a more efficient version of a big language model.”

Perhaps the key to living with generative AI without succumbing to either hype or anxiety is simply to understand its inherent limitations. “These tools aren’t really designed for a lot of things that people use them for,” said Chirag Shah, a professor of AI and machine learning at the University of Washington. He co-authored a high-profile research paper in 2022 criticizing the use of large language models in search engines. Tech companies, Shah believes, could do a much better job of being transparent about what AI can and can’t do before forcing it on us. However, that ship may have already sailed. Over the past few months, the world’s biggest tech companies (Microsoft, Meta, Samsung, Apple, and Google) have made announcements about weaving AI tightly into their products, services, and operating systems.

“The bots are bad at this because they weren’t designed for it,” Shah said of my word-game conundrum. Whether they are just as bad at all the other problems tech companies throw at them remains to be seen.

How else have AI chatbots failed you? Email me at pranav.dixit@engadget.com and let me know!