NVIDIA has demonstrated a new approach to real-time neural material models that offers a whopping 12-24x speedup in shading performance compared to traditional methods.

NVIDIA uses artificial intelligence to improve real-time material rendering; its neural approach provides up to a 24x boost over traditional methods

At SIGGRAPH, NVIDIA demonstrated a new real-time rendering approach called “Neural Appearance Models” that uses AI to accelerate shading. Last year the company unveiled its neural compression technique that unlocks 16x texture detail, and this year it is turning to a large jump in texture rendering and shading performance.

The new approach is intended as a universal runtime model for all materials from multiple sources, including real objects captured by artists, measurements, or materials generated from text prompts using generative AI. These models will be scalable to different quality levels, ranging from PC/console gaming and virtual reality to film rendering.

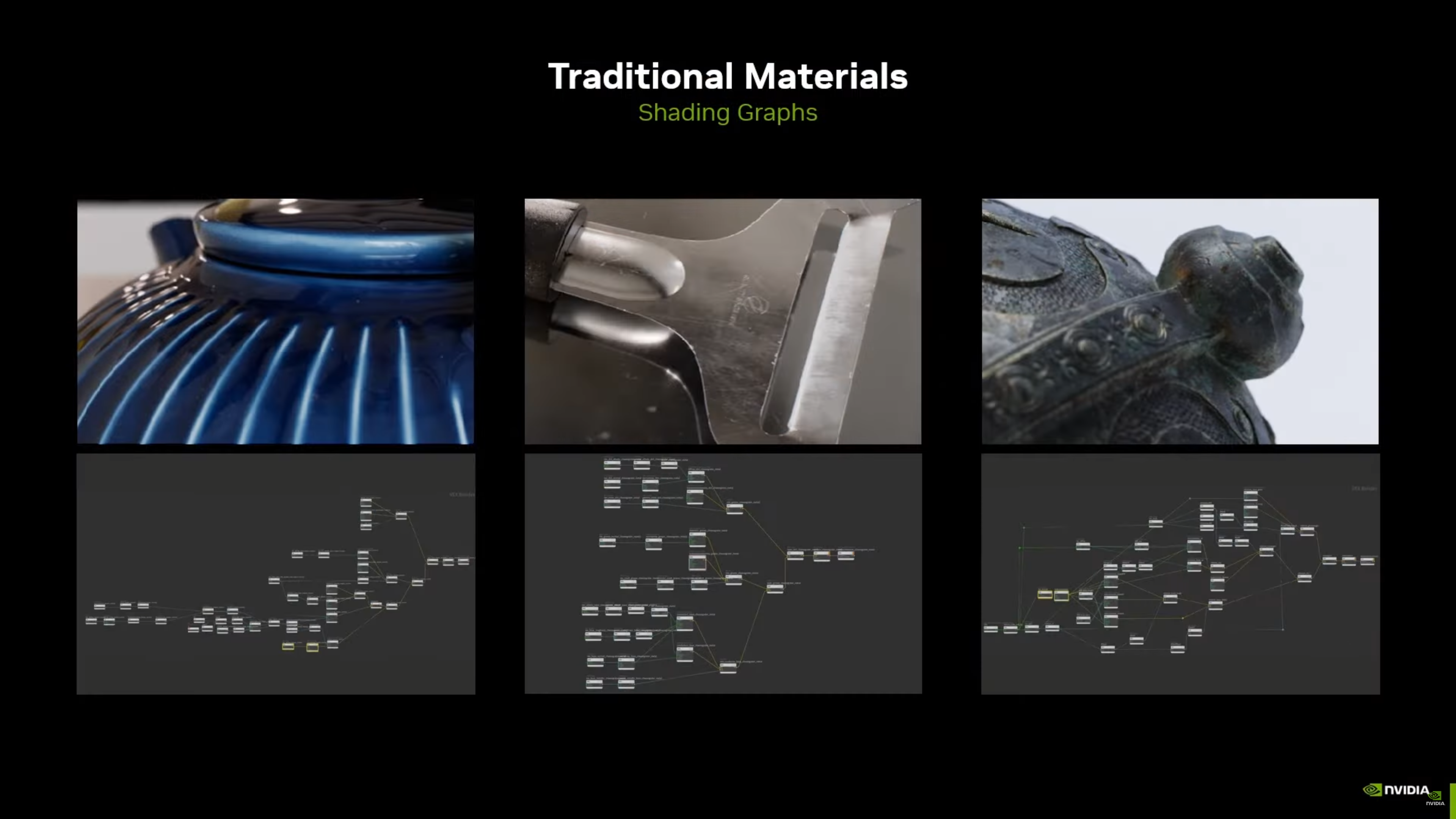

The model aims to capture fine detail in the subject being rendered, including delicate features and visual complexities such as dust, water spots, lighting, and even the rays cast by a mix of different light sources and colors. Traditionally, these materials would be rendered using shading graphs, which are not only expensive to evaluate in real time but also complex.

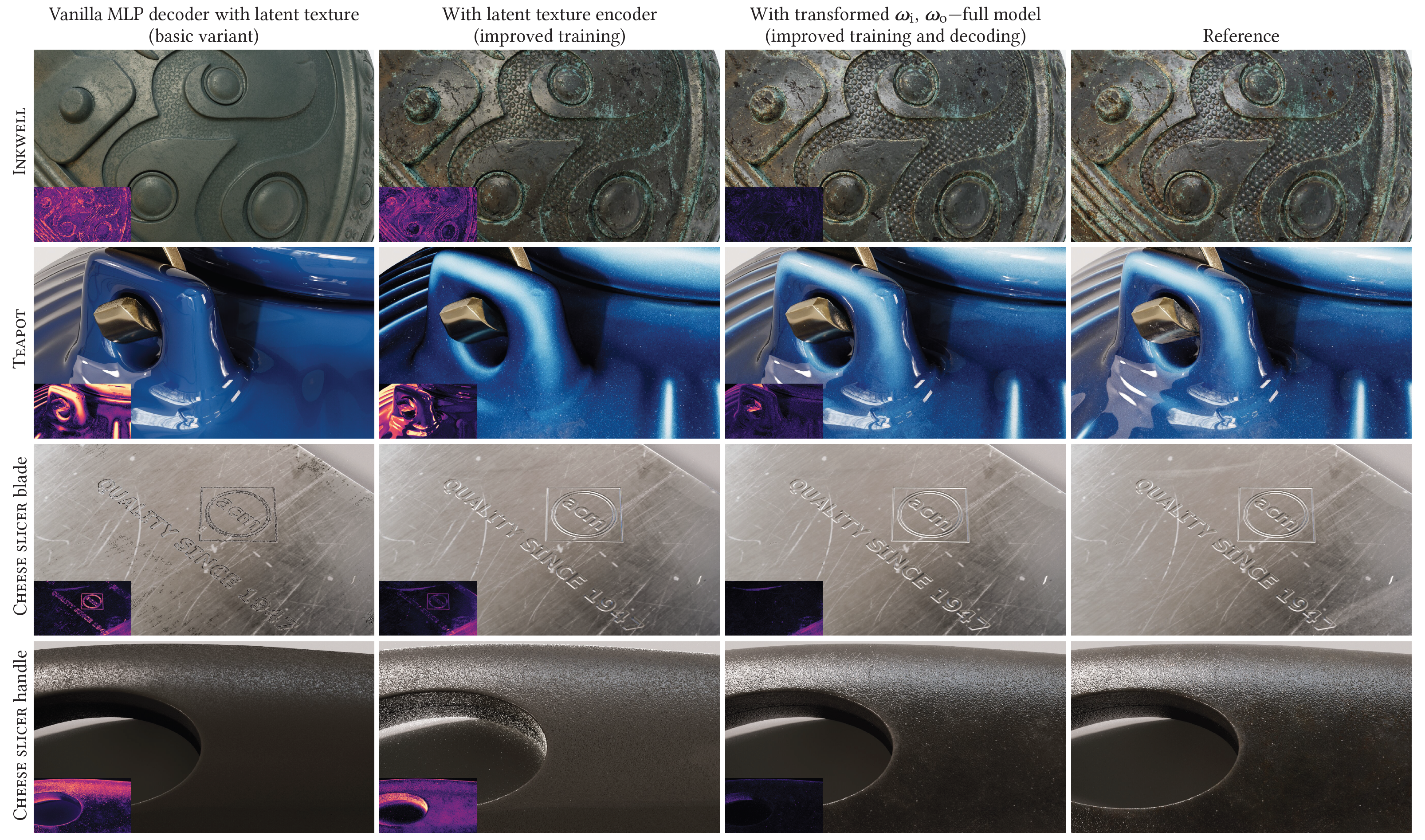

With NVIDIA’s “Neural Materials” approach, the traditional material rendering model is replaced with a cheaper and more computationally efficient neural network, which the company says will enable 12-24x faster shading calculations. The company offers a comparison between a model rendered using a shading graph and the same model rendered with the Neural Materials model.

The model matches the details of the reference image in every way, and as mentioned above, it does so much faster. Each model can also be viewed online so you can compare the image quality for yourself.

The new model achieves the following innovations:

- a complete and scalable system for film-quality neural materials

- tractable training for gigatexel-sized assets using an encoder

- decoders with priors for normal mapping and sampling

- and efficient implementation of neural networks in real-time shaders

The company also explains how the neural models work. At render time, using a neural material is very similar to using a traditional model. At each hit point, the renderer first looks up the textures and then evaluates two MLPs (multi-layer perceptrons): one to obtain the BRDF value and a second to importance-sample an outgoing direction. Enhancements that make the approach practical in real time include built-in graphics priors that improve output quality and an encoder that keeps training tractable at massive resolutions.
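To make the render-time steps concrete, here is a minimal NumPy sketch of a texture lookup followed by two small MLPs. It is an illustration only, not NVIDIA's implementation: the latent size, the network inputs and outputs, the untrained random weights, and names such as `lookup_latent`, `brdf_mlp` and `sampling_mlp` are all assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM = 8        # assumed size of the per-texel latent code
HIDDEN = 16           # neurons per hidden layer (the article's smaller config)
N_HIDDEN_LAYERS = 2   # number of hidden layers (assumed reading of "2 layers")

def make_mlp(in_dim, hidden, n_hidden, out_dim):
    """Build random, untrained weights for a small fully connected network."""
    dims = [in_dim] + [hidden] * n_hidden + [out_dim]
    return [(rng.standard_normal((a, b)) * 0.1, np.zeros(b))
            for a, b in zip(dims[:-1], dims[1:])]

def run_mlp(layers, x):
    """Evaluate the network: ReLU on hidden layers, linear output layer."""
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x

def lookup_latent(latent_texture, uv):
    """Nearest-texel lookup; uv is assumed to lie in [0, 1]."""
    h, w, _ = latent_texture.shape
    x = min(int(uv[0] * w), w - 1)
    y = min(int(uv[1] * h), h - 1)
    return latent_texture[y, x]

# Hypothetical 4x4 latent texture standing in for the encoded material.
latent_texture = rng.standard_normal((4, 4, LATENT_DIM))

# MLP 1: latent + incoming + outgoing direction -> RGB BRDF value (assumed I/O).
brdf_mlp = make_mlp(LATENT_DIM + 6, HIDDEN, N_HIDDEN_LAYERS, 3)
# MLP 2: latent + incoming direction -> 4 hypothetical sampling-lobe parameters.
sampling_mlp = make_mlp(LATENT_DIM + 3, HIDDEN, N_HIDDEN_LAYERS, 4)

def shade(uv, wi, wo):
    """One hit point: texture lookup, then the two MLP evaluations."""
    z = lookup_latent(latent_texture, uv)
    rgb = run_mlp(brdf_mlp, np.concatenate([z, wi, wo]))
    lobe = run_mlp(sampling_mlp, np.concatenate([z, wi]))
    return rgb, lobe

up = np.array([0.0, 0.0, 1.0])
rgb, lobe = shade((0.5, 0.5), up, up)
print(rgb.shape, lobe.shape)
```

In a real renderer the second network's output would drive a sampling distribution for the next bounce; here it is left as raw numbers because the article does not describe that stage.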

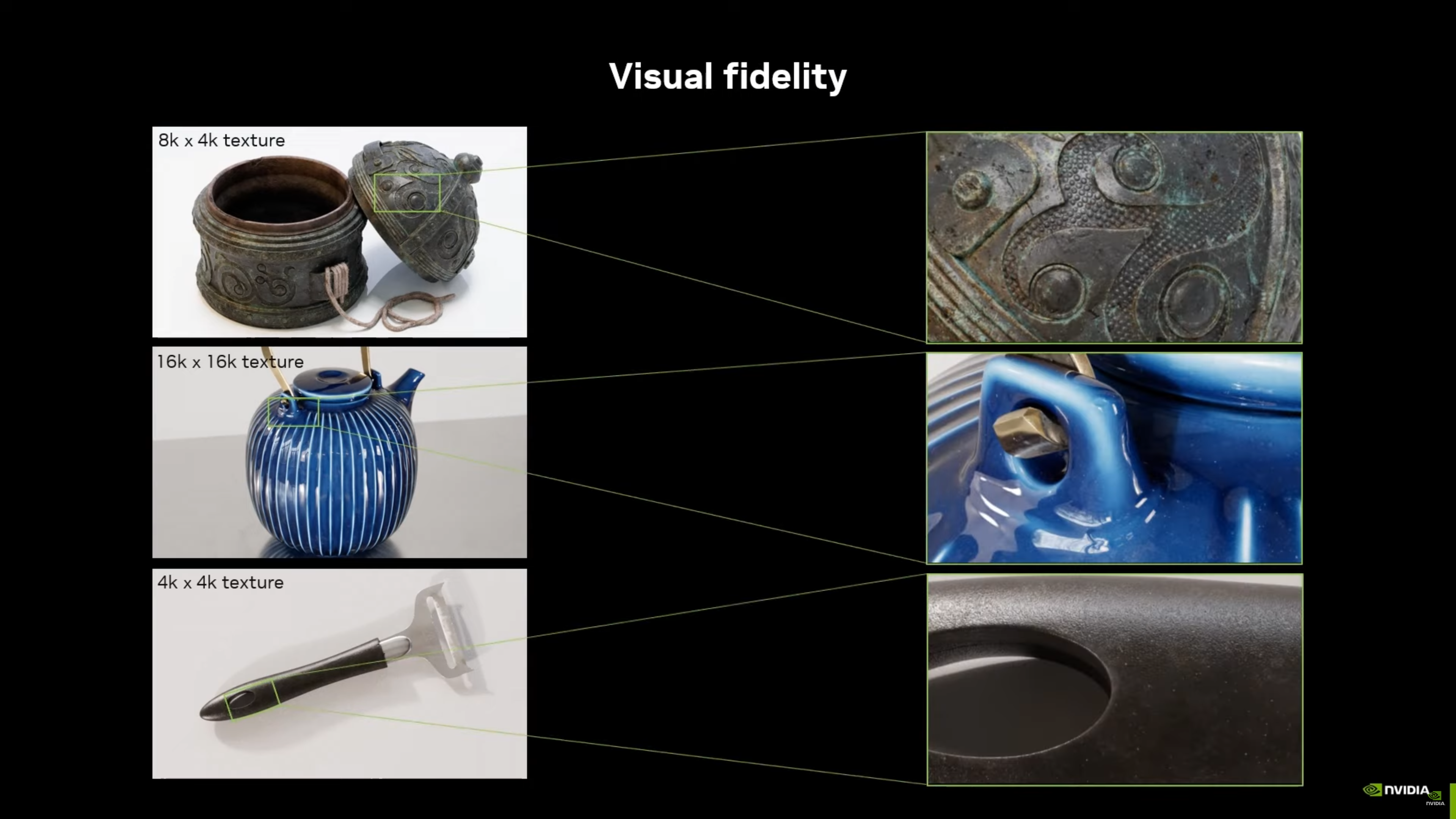

All models rendered using the “Neural Materials” approach support up to 16K texture resolution, allowing for deep and detailed objects in games. These advanced models are also less demanding to render, resulting in better performance than was possible before.

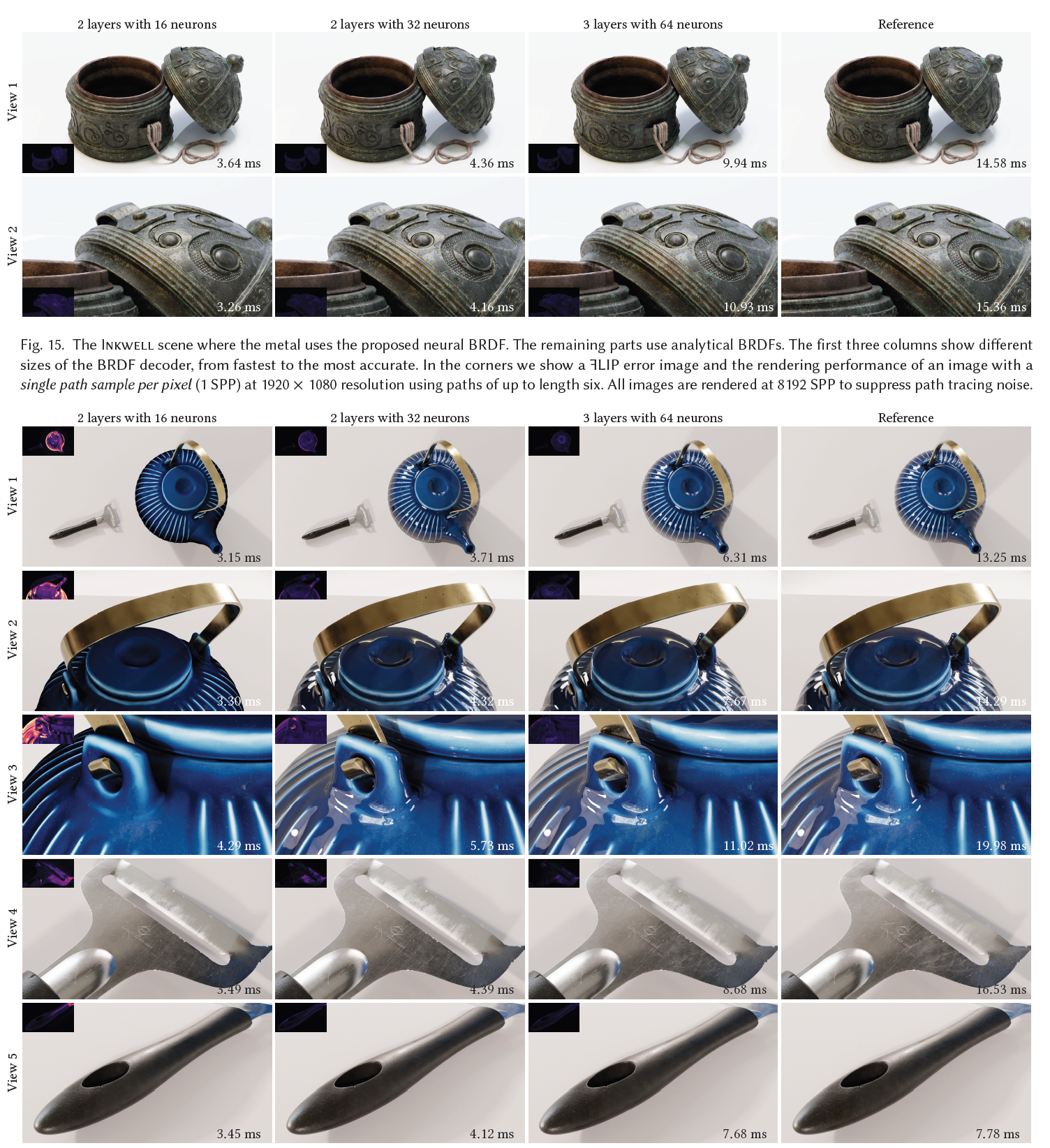

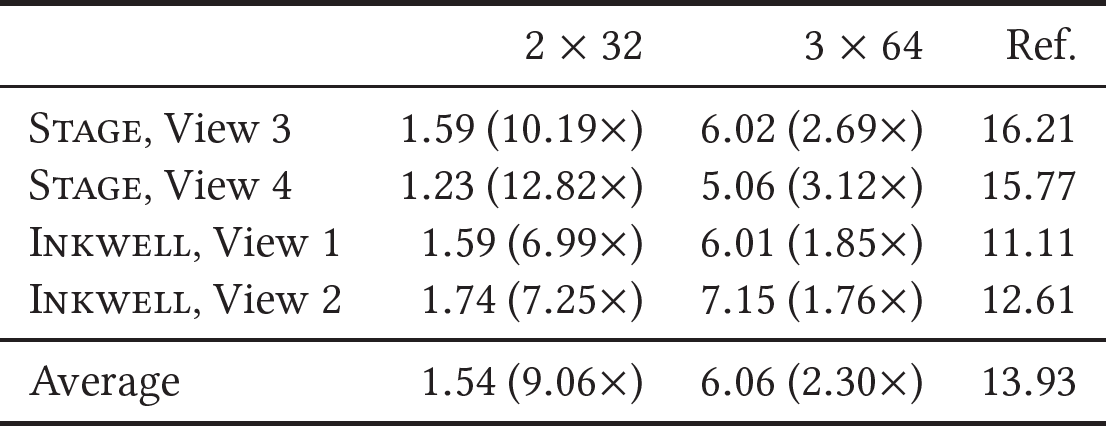

Because materials built on neural models run faster, NVIDIA can scale them to different applications. In a side-by-side comparison, NVIDIA shows two models: one with 2 layers of 16 neurons each, rendered in just 3.3 ms, and a slightly more detailed model with 3 layers of 64 neurons each, which still rendered in 11 ms.
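For a rough sense of how far apart those two configurations are, the sketch below counts multiply-accumulate operations and parameters per network evaluation. The input and output widths (`IN_DIM`, `OUT_DIM`) are assumptions chosen for illustration, as is the reading of "layers" as hidden layers; the article does not give them.

```python
def mlp_cost(in_dim, hidden, n_hidden, out_dim):
    """Return (multiply-accumulates, parameters) for one MLP evaluation."""
    dims = [in_dim] + [hidden] * n_hidden + [out_dim]
    macs = sum(a * b for a, b in zip(dims[:-1], dims[1:]))  # one MAC per weight
    biases = sum(dims[1:])                                   # one bias per neuron
    return macs, macs + biases

IN_DIM, OUT_DIM = 14, 3  # assumed: latent code plus two directions in, RGB out

small = mlp_cost(IN_DIM, hidden=16, n_hidden=2, out_dim=OUT_DIM)
large = mlp_cost(IN_DIM, hidden=64, n_hidden=3, out_dim=OUT_DIM)

print("2 layers x 16 neurons:", small)   # (528, 563)
print("3 layers x 64 neurons:", large)   # (9280, 9475)
print("MAC ratio:", round(large[0] / small[0], 1))
```

Under these assumptions the larger network needs roughly 17.6 times the arithmetic of the smaller one, while the quoted render times differ by about 3.3 times (11 ms against 3.3 ms). That gap would be consistent with the quoted times covering more than network evaluation alone, though the article does not break the numbers down.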

As for what hardware will support neural material models, NVIDIA says it will use existing machine learning frameworks like PyTorch and TensorFlow, shading languages like GLSL or HLSL, and hardware-accelerated matrix multiply-accumulate (MMA) engines on GPU architectures from AMD, Intel and NVIDIA. At runtime, the system compiles the description of the neural material into optimized shader code using the open source Slang shading language, which has backends for a variety of targets, including Vulkan, Direct3D 12 and CUDA.

The Tensor Cores introduced in modern graphics architectures also provide a step forward for these models. While Tensor Core acceleration is currently limited to compute APIs, NVIDIA exposes it to shaders through a modified version of the open source, LLVM-based DirectX Shader Compiler, which adds custom intrinsics for low-level access and allows the company to generate Slang shader code efficiently.

Performance was demonstrated on an NVIDIA GeForce RTX 4090 GPU using hardware-accelerated DXR (DirectX Raytracing) at 1920×1080. Model rendering times are given in ms, and the results show that the new neural approach renders images much faster and with better detail than the reference. In full-frame rendering with Neural BRDF, the 4090 achieves a 1.64x speedup with 3×64 and a 4.14x speedup with 2×16 model parameters. Material shading performance using path tracing shows a 1.54x speedup with 2×32 and a 6.06x speedup with 3×64 parameters.

Overall, NVIDIA’s Neural Materials approach looks set to redefine how textures and objects are rendered in real time. With the claimed 12-24x shading acceleration, it should allow developers and content creators to produce materials and objects with ultra-realistic textures that also run quickly on the latest hardware. We can’t wait to see this approach used in upcoming games and apps.
