Take, for example, a photo of Catherine, Princess of Wales, released by Kensington Palace in March. News organizations retracted it after experts noticed some obvious manipulations. And some are questioning whether the images captured during the assassination attempt on former President Donald Trump are real.

Here are a few things experts suggest the next time you come across an image that makes you wonder.

Zoom in

It may sound basic, but a study by researcher Sophie Nightingale of Lancaster University in the UK found that across age groups, people who took the time to zoom in on pictures and carefully examine different parts were better at spotting altered images.

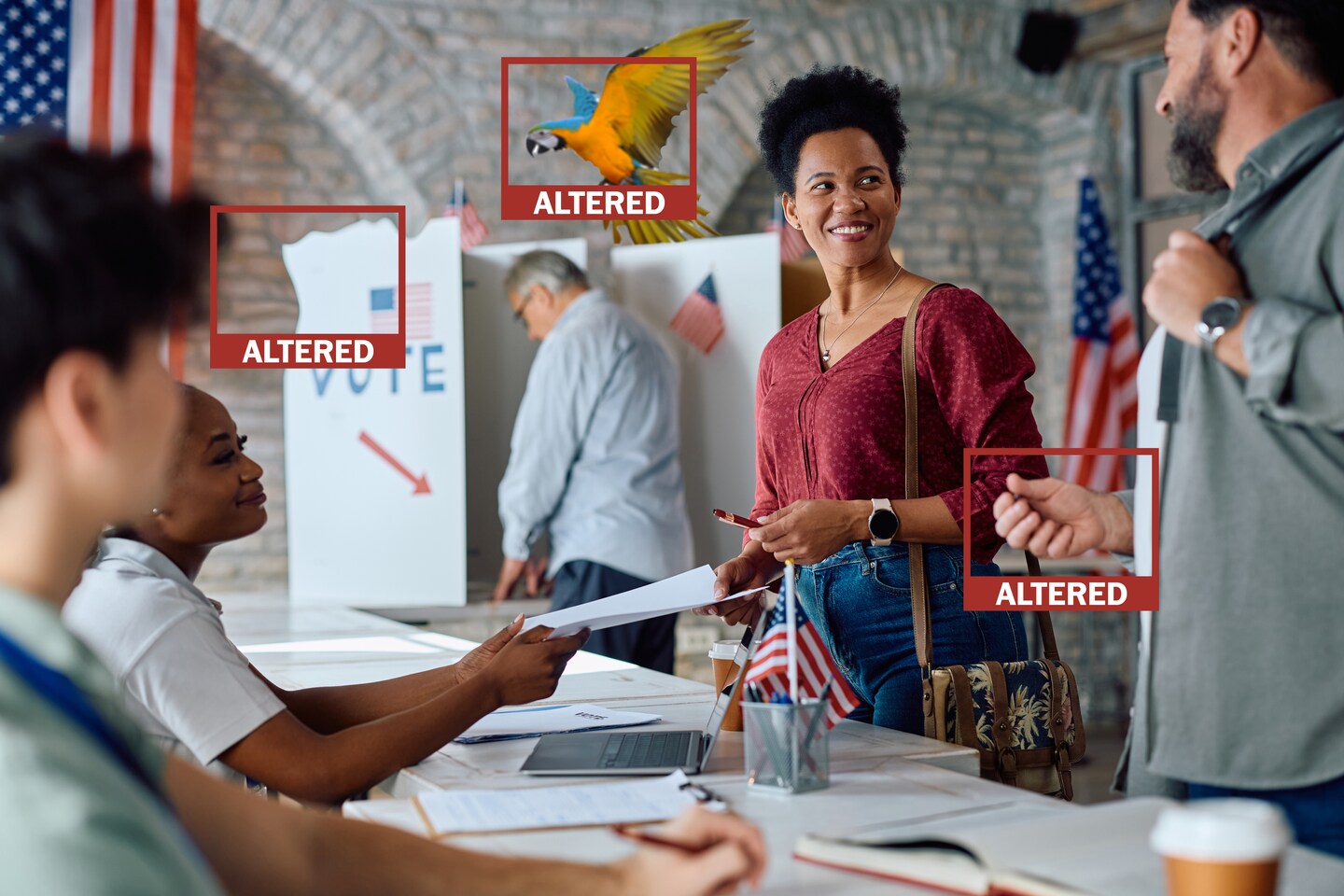

Try it the next time you get a weird feeling about a photo. Just make sure you don’t focus on the wrong things. To help, we’ve created this (slightly exaggerated) sample image to highlight some common signs of image manipulation.

Instead of focusing on things like shadows and lighting, Nightingale suggested looking at “photometric” clues like blurring around the edges of objects, which might suggest they were added later; noticeable pixelation in some parts of the image but not in others; and differences in coloring.

Consider this parrot: For one thing, who brings a parrot to a polling place?

And take a closer look at its wings; the blurred edges of its leading feathers contrast with the rounded cutouts closer to its body. This is clearly an amateur Photoshop job.

Look for funky geometry

Fine details are among the hardest things to edit smoothly in an image, so they often get distorted. The damage is usually easiest to see where regular, repeating patterns are disrupted.

In the image below, notice how the brick shapes in the wall behind the divider are distorted and crushed. Something strange happened here.

Consider the infamous photo of Princess Catherine.

The princess appeared with her arms around her two children. Online sleuths were quick to point out inconsistencies, including floor tiles that appeared to overlap and some molding that appeared misaligned.

In our polling place example, did you realize that this person has an extra finger? Of course, it’s possible to have a condition like polydactyly, where people are born with extra fingers or toes. However, this is unlikely, so if you notice things like extra digits, it could be a sign that AI has been used to alter the image.

It’s not just bad Photoshopping that screws up fine details. AI is notoriously unreliable when it comes to manipulating detailed images.

So far, this is especially true for structures like the human hand, although AI is getting better at them. Still, it is not uncommon for images generated by or edited with AI to show the wrong number of fingers.

Consider the context

One way to determine the authenticity of an image is to step back and look at what’s around it. The context in which an image is placed can tell you a lot about the intent behind sharing it. Consider the social media post we created below about our altered image.

Ask yourself: Do you know anything about the person who shared the photo? Is it attached to a post that seems intended to elicit an emotional response? What does the caption, if any, say?

Some fake images, or even real images placed in a context that differs from reality, are meant to appeal to our “intuitive, gut thinking,” says Peter Adams, senior vice president of research and design at the News Literacy Project, a non-profit organization that promotes critical media evaluation. These edits can artificially evoke support or sympathy for specific causes.

Nightingale recommends asking yourself a few questions when you see an image that excites you: “Why might someone have posted this? Is there some ulterior motive that might suggest this might be a fake?”

In many cases, Adams adds, comments or replies attached to the photo can reveal a fake for what it is.

Here’s a real-life example pulled from X. An AI-generated image of Trump surrounded by six young black men first appeared in October 2023, but resurfaced in January attached to a post claiming that the former president had stopped his motorcade to meet the men in an impromptu meet-and-greet.

But it wasn’t long before commenters pointed out inconsistencies, such as the fact that Trump appeared to have only three large fingers on his right hand.

Go to the source

In some cases, real images come out of the blue in a way that makes us wonder if they really happened. Finding the source of these images can help shed important light.

Earlier this year, science educator Bill Nye appeared on the cover of Time Out New York, dressed more stylishly than in the baby blue lab coat many of us remember. Some wondered if the images were AI-generated, but tracing the credits back to the photographer’s Instagram account revealed that the Science Guy was indeed wearing sophisticated, youthful clothes.

For images that claim to come from a real news event, it’s also worth checking out news services like the Associated Press and Reuters and companies like Getty Images—all of which let you peek at the editorial images they’ve captured.

If you happen to find an original image there, you are looking at an authentic one.

Try a reverse image search

If an image looks uncharacteristic of the person in it, looks markedly biased, or just generally doesn’t pass the vibe check, reverse image search tools like TinEye or Google Image Search can help you find the originals. Even if they can’t, these tools can still surface valuable context about the image.

Here’s a recent example: Shortly after a 20-year-old gunman tried to kill Trump, an image surfaced on the Meta-owned social media service Threads that depicted Secret Service agents smiling as they clung to the former president. This image was used to support the baseless theory that the shooting was staged.

The original photo does not contain a single visible smile.

Even armed with these tips, you’re unlikely to distinguish real images from manipulated ones 100 percent of the time. But that doesn’t mean you shouldn’t keep your sense of skepticism honed. This is part of the work we all have to do sometimes to remember that even in conflicting and confusing times, the factual truth still exists.

Losing sight of that, Nightingale says, only enables bad actors to “dismiss everything.”

“That’s where the public is really at risk,” she said.

Editing by Carly Domb Sadoff and Yoon-Hee Kim.