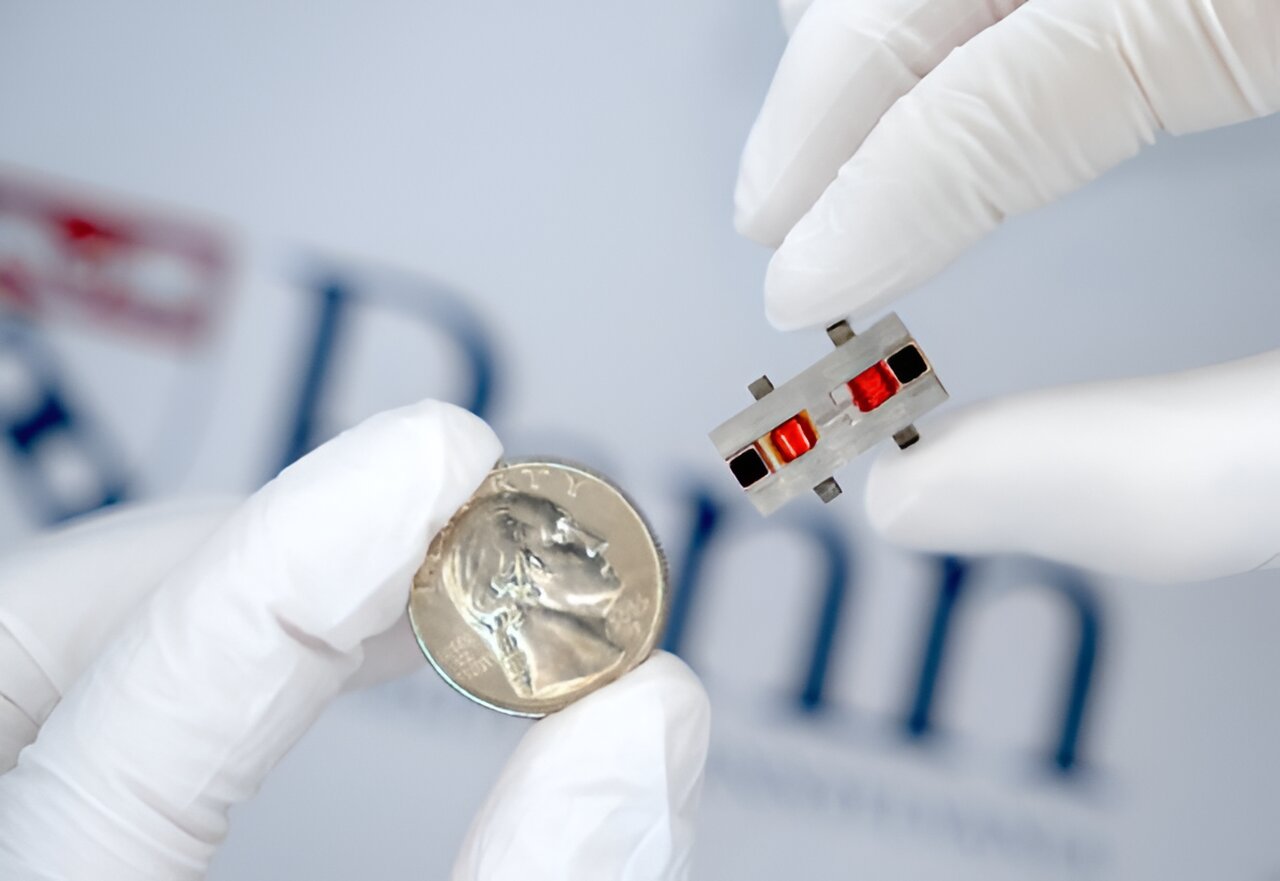

Engineers find way to protect microbes from extreme conditions

Credit: Pixabay/CC0 Public Domain

Microbes that are used for healthcare, agriculture or other applications must be able to withstand extreme conditions and, ideally, the manufacturing processes used to make tablets…